Wan 2.2 is an open-source generative AI video model from Alibaba's DAMO Academy, publicly released on July 28, 2025. It introduces a Mixture-of-Experts (MoE) architecture into the video diffusion model, which significantly enhances model capacity and performance without increasing inference costs. The model is notable for its cinematic-level aesthetics, high-definition 720p output, and its ability to generate complex, fluid motion with greater control than previous models.

Creates complex, fluid, and natural movements in videos, improving realism and coherence.

Trained on meticulously curated data to produce videos with precise control over lighting, color, and composition.

Generates videos with native 720p resolution at 24fps, suitable for professional use.

Cinematic Camera Control: generates videos with specific camera movements for a cinematic look.

Creates seamless video transitions by interpolating between a specified start and end frame.

A highly compressed 5B model is available that can run on consumer GPUs like an RTX 4090.

The model is publicly available, allowing for fine-tuning with LoRA and other community-developed tools.
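For readers curious about what LoRA fine-tuning involves, here is a minimal sketch of the general idea: the original weights stay frozen, and a small low-rank update is learned and added on top. The shapes, values, and function names below are illustrative assumptions and are not taken from the Wan 2.2 codebase.

```python
def lora_param_count(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters in one adapter: A is (rank x d_in), B is (d_out x rank)."""
    return rank * (d_in + d_out)


def matmul(a, b):
    """Plain-Python matrix product of two nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def apply_lora(weight, lora_b, lora_a, alpha: float, rank: int):
    """Return W + (alpha / rank) * (B @ A) without modifying the inputs."""
    delta = matmul(lora_b, lora_a)
    scale = alpha / rank
    return [
        [w + scale * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(weight, delta)
    ]


# Toy example: a frozen 2x3 weight matrix with a rank-1 adapter (values are made up).
weight = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
lora_b = [[1.0], [2.0]]       # d_out x rank
lora_a = [[0.5, 0.0, 1.0]]    # rank x d_in
merged = apply_lora(weight, lora_b, lora_a, alpha=2.0, rank=1)

# Hypothetical layer size, only to show the scale of the savings.
full_params = 4096 * 4096
adapter_params = lora_param_count(4096, 4096, rank=16)
print(merged, full_params // adapter_params)
```

In this toy layer the adapter trains a small fraction of the parameters of the full matrix, which is why LoRA is a popular way to customize large open models on modest hardware.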

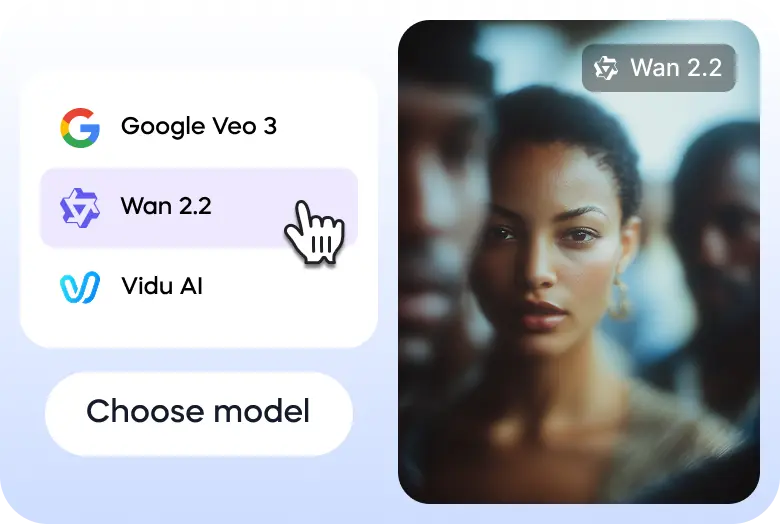

Here are three simple steps to help you explore Wan 2.2 on Vizard:

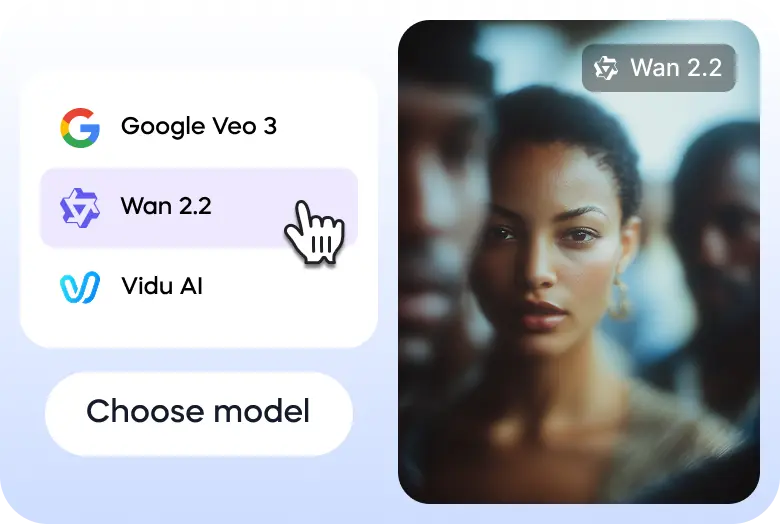

Go to Vizard’s text-to-video generator and select the Wan 2.2 model.

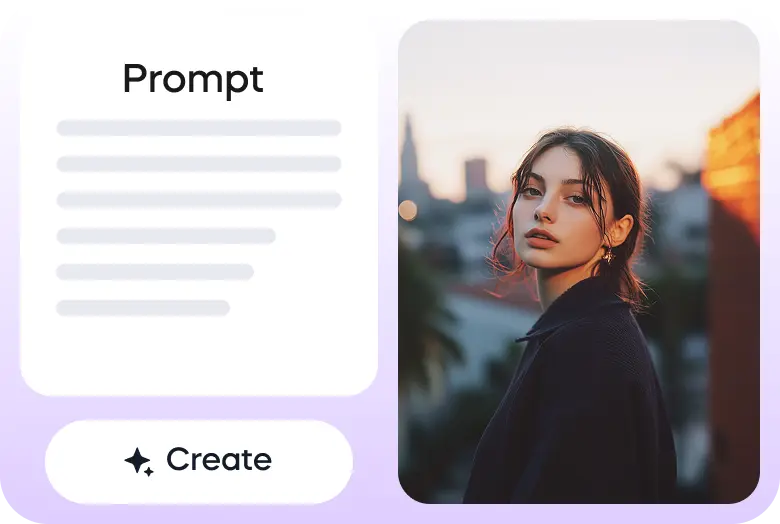

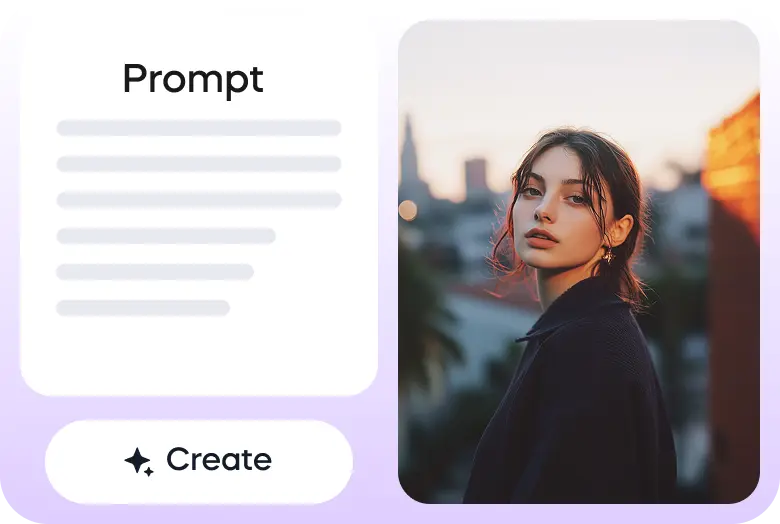

Enter your prompt or upload your image to get started.

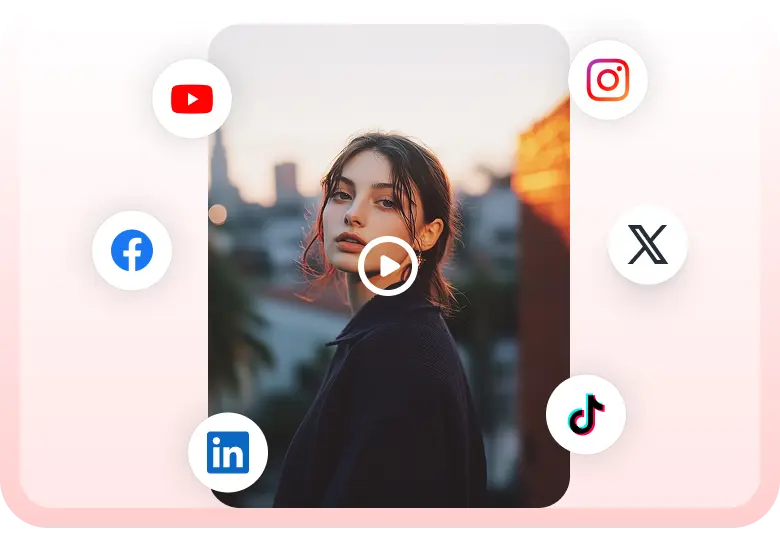

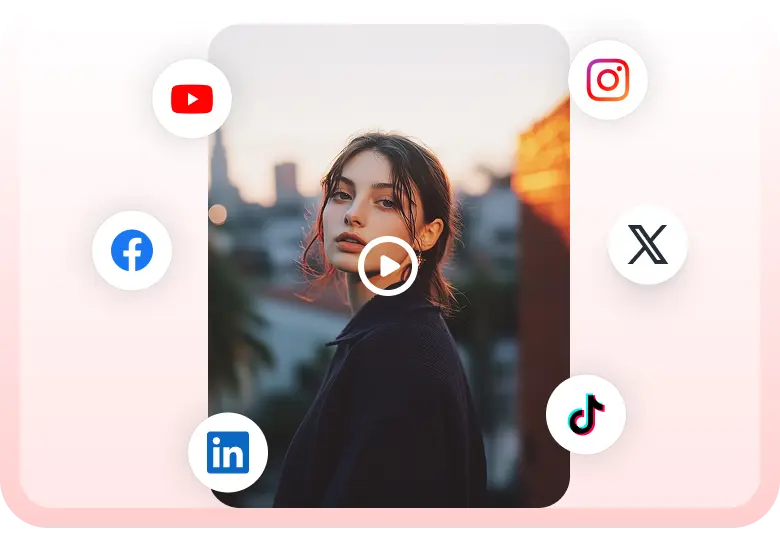

Once the video is ready, you can download it or share it on your social media accounts directly through Vizard.

Wan2.2 The quality is significantly improved, tested some human body performance. Amazing@Alibaba_Wan #wan2.2 pic.twitter.com/ptUXlOS1Ka

— TTPlanet (@ttplanet) July 28, 2025

Fun workflow I was playing with last night

1) Kontext to remove Thor from the shot

2) Photopea to place Shrek

3) Kontext + Relight lora to blend him into the shot

4) Wan2.2 i2V to animate

Very addicting... 😍

Prompts are written up in the corner. Wan 2.2 prompt below 👇 pic.twitter.com/EnWE2OgA7T

— A.I.Warper (@AIWarper) August 8, 2025

Wan2.2(I2V) works pretty good.(Base image is not AI generated.) pic.twitter.com/5g9CfoiqT4

— Xiu Ran (@f_fanshu) July 30, 2025

You can’t skate here sir..

Wan2.2 @Alibaba_Wan + @ComfyUI 🛹 pic.twitter.com/kFaKeoKYNi

— Ingi Erlingsson 🪄 (@ingi_erlingsson) August 5, 2025

I hope you are enjoying your summer 🍸⛱️

Because open source AI video is back 💪

Wan2.2 is out!✨ pic.twitter.com/9feAXVC4Hi

— Julian Bilcke (@flngr) July 28, 2025

Tried doing a wall punching effect using the new Wan2.2 open source model. @Alibaba_Wan @ComfyUI pic.twitter.com/PGKoZmpuso

— enigmatic_e (@8bit_e) July 31, 2025

Tried the Veo3 annotation trick with Wan2.2 14B, 8 steps pic.twitter.com/x8Lnmcx1wg

— Linoy Tsaban (@linoy_tsaban) July 29, 2025

What is Wan 2.2?

Wan 2.2 is a state-of-the-art, open-source generative AI video model developed by Alibaba's DAMO Academy. It is a major upgrade to the foundational Wan video model series, designed to create high-quality, cinematic videos from text and image prompts. The model is known for its advanced motion generation and aesthetic controls.

What version(s) are available?

Wan 2.2 is available in several versions with different capabilities. The core open-source models include the efficient TI2V-5B model, which supports both text-to-video (T2V) and image-to-video (I2V) at 720p resolution and can run on consumer-grade GPUs. There are also larger 14B models, such as the T2V-A14B and I2V-A14B, which use a Mixture-of-Experts (MoE) architecture for higher quality and are better suited to more powerful hardware.
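To make the choice between versions concrete, here is a small sketch that encodes the variants described above as data and filters them by task and hardware. The `Variant` class, the `pick_variants` helper, and the true/false hardware flag are hypothetical simplifications of this page's description, not part of any official tooling.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Variant:
    name: str
    tasks: FrozenSet[str]   # "t2v" = text-to-video, "i2v" = image-to-video
    uses_moe: bool          # per the description above, MoE is in the 14B models
    consumer_gpu: bool      # simplification: True if described as consumer-GPU friendly


VARIANTS = [
    Variant("TI2V-5B", frozenset({"t2v", "i2v"}), uses_moe=False, consumer_gpu=True),
    Variant("T2V-A14B", frozenset({"t2v"}), uses_moe=True, consumer_gpu=False),
    Variant("I2V-A14B", frozenset({"i2v"}), uses_moe=True, consumer_gpu=False),
]


def pick_variants(task: str, consumer_gpu_only: bool) -> List[str]:
    """List variant names that support the task on the available hardware."""
    return [
        v.name
        for v in VARIANTS
        if task in v.tasks and (v.consumer_gpu or not consumer_gpu_only)
    ]


print(pick_variants("i2v", consumer_gpu_only=True))
print(pick_variants("t2v", consumer_gpu_only=False))
```

In practice the hardware boundary is softer than a single flag suggests, so treat the result as a starting point rather than a hard rule.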

What makes it unique?

Wan 2.2 stands out due to its innovative Mixture-of-Experts (MoE) architecture, which separates the denoising process into specialized stages for better performance without a significant increase in computational cost. It also features cinematic-level aesthetic controls, an ability to generate complex and fluid motion, and a First-Last Frame to Video (FLF2V) function that creates smooth transitions between two images. Its open-source nature allows for community-driven fine-tuning and integration.
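The idea of splitting denoising into specialized stages can be sketched in a few lines. In this toy version, one expert handles the early, high-noise steps and another handles the later, low-noise steps, and only one of them runs at each step. The expert functions, the boundary value of 0.5, and the timestep schedule are illustrative assumptions, not the actual Wan 2.2 implementation.

```python
from typing import Callable, Dict, List, Tuple

Latent = List[float]
Expert = Callable[[Latent, float], Latent]


def high_noise_expert(latent: Latent, t: float) -> Latent:
    # Toy stand-in: early steps make large corrections (overall layout).
    return [x * 0.5 for x in latent]


def low_noise_expert(latent: Latent, t: float) -> Latent:
    # Toy stand-in: late steps make smaller corrections (fine detail).
    return [x * 0.75 for x in latent]


def select_expert(t: float, boundary: float) -> str:
    """Pick the expert for noise level t (1.0 = pure noise, 0.0 = clean)."""
    return "high_noise" if t >= boundary else "low_noise"


def denoise(
    latent: Latent,
    timesteps: List[float],
    experts: Dict[str, Expert],
    boundary: float = 0.5,
) -> Tuple[Latent, List[str]]:
    """Run the denoising loop, recording which expert handled each step."""
    trace = []
    for t in timesteps:
        name = select_expert(t, boundary)
        latent = experts[name](latent, t)  # exactly one expert runs per step
        trace.append(name)
    return latent, trace


experts = {"high_noise": high_noise_expert, "low_noise": low_noise_expert}
final_latent, trace = denoise([1.0, -2.0], [1.0, 0.75, 0.5, 0.25, 0.0], experts)
print(trace)
```

Because each step calls a single expert, the total model can hold more parameters while the work done per step stays about the same as for one expert alone.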

Is it safe to use?

As an open-source model, the safety of Wan 2.2 largely depends on how it is implemented and used. The developers have established a usage policy that prohibits the generation of illegal, harmful, or misleading content. While the model itself does not have a built-in content moderation system, developers and platforms using Wan 2.2 are expected to implement their own safeguards to ensure responsible use and compliance with legal and ethical standards.

How fast is it?

Wan 2.2 is optimized for speed, particularly its TI2V-5B model, which is one of the fastest models available at 720p resolution and 24fps. A 5-second video can be generated in just a few minutes on a consumer GPU like an RTX 4090, with more powerful hardware offering even faster results. In the 14B models, the Mixture-of-Experts (MoE) architecture adds capacity without increasing inference cost.
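For a rough sense of the workload behind those numbers, the short calculation below counts the frames and pixels in a 5-second clip at 24fps. It assumes a nominal 720p frame of 1280x720 pixels; the exact frame size and frame count a given model produces may differ slightly.

```python
def frame_count(duration_s: float, fps: int) -> int:
    """Number of frames in a clip of the given length."""
    return round(duration_s * fps)


def total_pixels(duration_s: float, fps: int, width: int, height: int) -> int:
    """Total pixels across all frames of the clip."""
    return frame_count(duration_s, fps) * width * height


frames = frame_count(5, 24)
pixels = total_pixels(5, 24, width=1280, height=720)  # nominal 720p (assumption)
print(frames, pixels)
```

Under these assumptions a 5-second clip comes to 120 frames and roughly 110 million pixels, which helps explain why generation takes minutes rather than seconds on a single GPU.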

Is it accessible via mobile?

Wan 2.2 is primarily a developer-focused, open-source model. It does not have an official, dedicated mobile app from its developers. However, because it is open-source, developers can integrate it into mobile-friendly web applications or create their own mobile apps. Its consumer-grade GPU compatibility also makes it more accessible to users with high-end mobile workstations.

What can it generate or create?

Wan 2.2 is capable of generating a wide variety of video content, from short-form ads and social media clips to cinematic scenes and animations. Its capabilities include text-to-video, image-to-video, and image-based in-painting. Users can generate videos with specific camera movements, precise aesthetic styles, and realistic motion for characters and objects, making it a versatile tool for both technical and creative projects.

How can it be used?

The most common way to use Wan 2.2 is by downloading the model files and running them locally on a compatible machine, often with integration through platforms like ComfyUI or Diffusers. For a more accessible experience, the model is available via cloud API providers. There is also an opportunity to try Wan 2.2 for free through the Vizard platform, which provides an online interface for experimenting with the model's capabilities.
