Updated: October 1, 2025

What is Sora 2

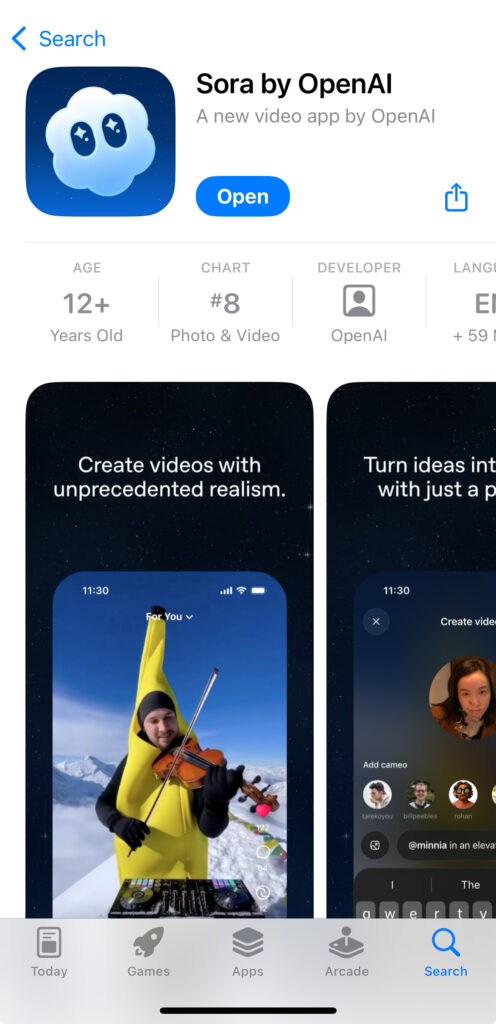

On September 30, 2025, OpenAI introduced Sora 2, a next-generation model for AI video with synchronized audio. Compared with the original Sora, it increases realism, improves physical consistency, and follows user instructions with higher fidelity. OpenAI's system card describes sharper realism, more accurate physics, synchronized audio, stronger steerability, and a wider stylistic range. The model powers a new consumer app named Sora that emphasizes creating and sharing short AI videos.

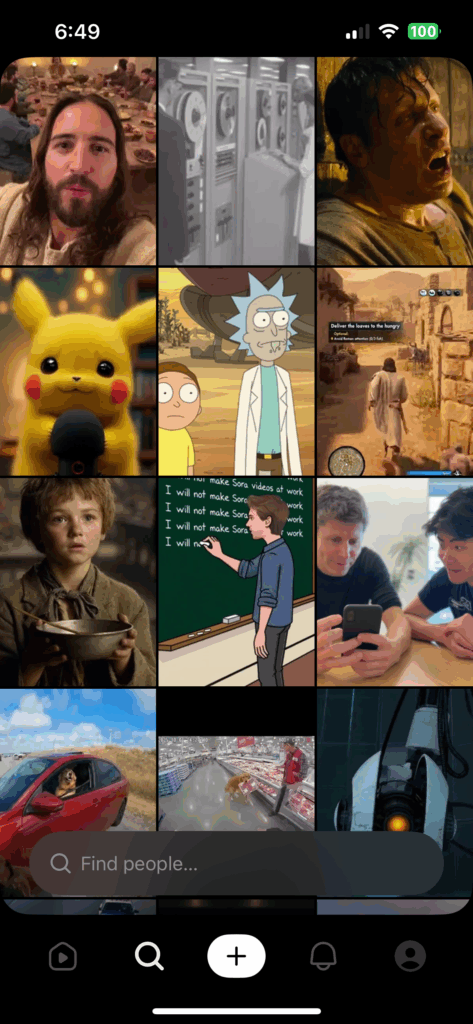

The app behaves like a creative playground. You write a prompt, Sora 2 generates video and audio together, and you can publish the result to a vertical feed. Several outlets compare the experience to a TikTok-like environment where people create short clips of themselves or their friends using AI.

Key launch facts and dates

OpenAI announced Sora 2 and the Sora app on September 30, 2025. Coverage and documentation published that day highlight the model's goals and the initial social experience. Early reports also note that the app focuses on very short clips and is in a limited rollout.

On October 1, 2025, additional reporting described how the social feed quickly filled with user experiments, including highly realistic cameos of well-known figures that OpenAI attempts to restrict through moderation. These stories underline both the appeal and the challenges of a consumer feed built on generative video.

How to download Sora and request access

Access is still limited, so here is the practical path for readers who want to try Sora 2 today, based on the current rollout.

- Platform and regions: The Sora app is live on iOS in the United States and Canada, and Android support is planned. If you do not see the app yet, you are not alone: OpenAI is enabling regions and accounts in stages.

- Invite system: Access is invite-only during the initial period. Early users receive a small number of invites to share; one user can invite up to four others. Media monitoring shows high demand and even attempts to resell invites on marketplaces, which OpenAI warns can violate its terms. Treat any resold code as risky and prefer official invitations.

- Web access and help page: OpenAI notes that Sora 2 is also being enabled through sora.com for some users, with a help page that explains the basics and reminds readers that access will expand over time. If you cannot use the app yet, join the waitlist and watch the help center for updates.

- Identity and permissions for Cameos: The app can build a private likeness profile after a short capture of your face and voice. You control who can use your likeness, and the app notifies you when a clip is created using it. These controls are central to Sora's safety design.

What Sora 2 can do

Sora 2 turns a simple text instruction into a short video with matching audio: speech, ambient sound, and sound effects that align with what you see on screen. It also targets physical behaviors that previously broke immersion. For example, when a basketball misses the hoop, the ball now rebounds off the rim or backboard rather than snapping to an implausible position. The effect feels more coherent, especially in action scenes.

OpenAI's materials emphasize better steerability. You can ask for specific camera movement, lighting, composition, or style, and the model is more likely to follow. The system card also describes stronger continuity across multiple shots inside a single prompt. The net result is a tool that feels closer to a director's assistant than a one-shot generator.

Headline features at launch

1. Synchronized audio

Sora 2 generates dialogue and effects that match the video. This closes a major gap for creators who want finished clips without manual audio work. Early reports confirm that audio sync is a signature capability.

2. Improved physics and realism

The model focuses on world dynamics. Motion is more believable, water and fabric behave more naturally, and object interactions read as intentional rather than accidental. This does not eliminate all artifacts, yet it raises the baseline.

3. Cameos

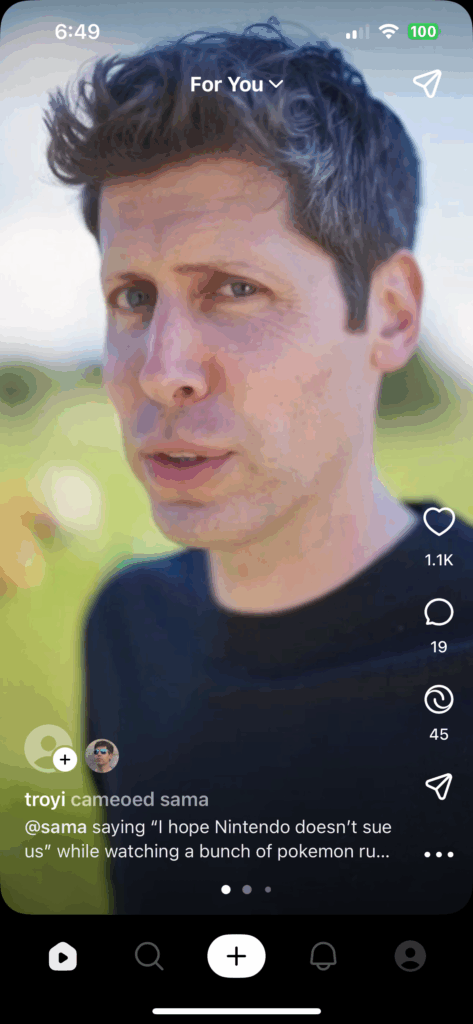

After a one-time likeness capture, Sora can insert you or a consenting friend into a generated scene. Controls let you decide who can access your likeness and when to revoke that access. This can feel magical, and it requires clear boundaries, which the app attempts to provide. Sam Altman's Cameo is one of the most popular on Sora, and videos of him appear throughout the feed, for example one showing him climbing El Capitan.

4. Social creation and remix

Sora ships with a discovery feed and remix mechanics. People can build on each other’s clips, create chains, and test ideas quickly. The social layer is part of the product, not an afterthought.

5. Safety systems and policy posture

OpenAI describes layered moderation, consent gates for identities, and provenance signals. News coverage also notes a copyright policy that relies on opt-out, which has already drawn attention from rights holders. Readers who create branded content should follow the policy conversation and label AI output clearly.

Use cases that already make sense

Short social clips

Creators can craft ten-second scenes that feel finished because audio and video arrive together. This is ideal for reaction moments, micro skits, and concept teasers that ride a trend before it cools. Tech press confirms that the app currently centers on very short clips, which suits both the feed and the compute budget.

Personal cameos

Friends can appear in the same generated world with voices and faces that feel close to real. Controls help reduce misuse and keep the experience playful. This is a natural path to viral moments, and it is also the area that requires the most care and consent.

Storyboarding and previsualization

Creative teams can pitch ideas with motion and audio in place. A quick sequence can convey timing, camera intent, and tone before anyone books a stage or hires talent. The stronger physics model helps these sketches feel more trustworthy.

Spec advertisements and brand snippets

Marketers can test a hook, a jingle, or a micro narrative. Even if the final campaign uses live action, early Sora drafts let teams validate direction and reduce creative risk. Journalists covering the launch highlight that the platform is already pulling in brands and creators who want to experiment at lower cost.

Step by step: how to use Sora 2 today

Step one. Get access

Install the Sora app on iOS if you are in the United States or Canada and have an invite. If not, join the waitlist and keep an eye on the help page and the App Store listing because access is expanding over time. Avoid buying invites from resellers since these sales can violate terms.

Step two. Decide whether to enable Cameos

Record a short face and voice sample only if you are comfortable with your likeness appearing in AI scenes. Review the controls that govern who can use it and set notifications that alert you whenever a clip uses your profile.

Step three. Write a precise prompt

Describe the setting, the lighting, the camera movement, the emotion, and the action beats. Sora 2 responds well to concrete details. If you want continuity across two shots, state the link clearly. The system card indicates that longer instructions with clear structure improve fidelity.

Step four. Generate and review

Watch the video once for story and again for physics and lip sync. Note where motion feels odd or where audio lands early or late. Small prompt edits often fix these misses.

Step five. Remix or export

Use the app’s remix tools to iterate, then publish to the feed or save for external platforms. Treat each upload like a public work and add an AI disclosure when appropriate. Coverage of the first day shows how quickly realistic clips can spread, which is powerful and also sensitive for identity and brand safety.

Limits and open questions

Duration and scope

The social app focuses on very short clips in this first phase. That keeps generation times reasonable and makes the feed snappy, although it limits cinematic storytelling. Expect duration to change as the rollout matures.

Moderation and misuse

OpenAI blocks many public-figure prompts and provides identity controls, yet early hands-on reports still find mixed results. The system is improving, and creators should act responsibly and obtain consent.

Copyright posture

Reuters reports that the company uses an opt-out mechanism for rights holders. The industry will continue to test and debate this stance. Brands should keep legal review in the loop and maintain provenance records for important work.

Invite economy

High demand creates an invite market, which is tempting yet risky. Stick to official channels and treat your account as an asset worth protecting.

Our early verdict

Sora 2 feels like a threshold moment for AI video because it brings sound and picture together with a level of control that supports real creative intent. The short-clip focus fits the social format and gives creators a place to practice and learn. The combination of Cameos, improved physics, and a discovery feed makes creation feel personal and fast. At the same time, the product inherits significant questions about safety and copyright. The best strategy for teams is simple: learn the tool now, publish with clear disclosure, and create a review gate for sensitive content. This balanced approach lets you move quickly without ignoring real-world risks.