As a Product Manager for an AI-powered video editing tool, the announcement of OpenAI’s GPT-5.2 API is less about abstract AI progress and more about a direct impact on my roadmap, feature set, and competitive edge. After reviewing the technical specs, developer guides, and early benchmarks, here’s my third-party analysis of what this release truly means for our industry.

The Strategic Upside: New Levers for Product Differentiation

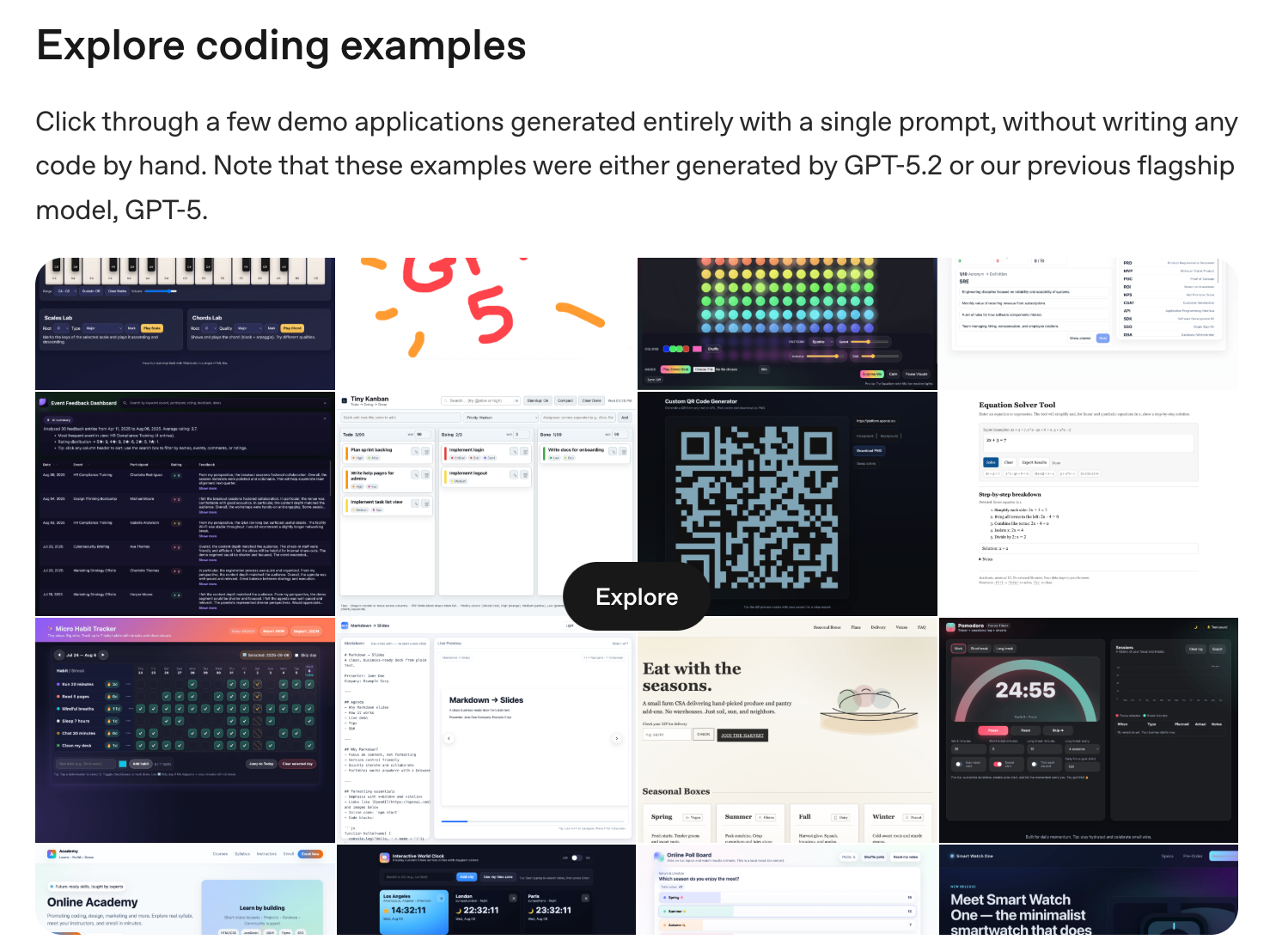

From a product lens, GPT-5.2 isn’t just a better model; it’s a new toolbox that allows us to solve previously intractable user problems.

1. The “Visual Reasoning” Breakthrough is a Game-Changer.

The cited >50% reduction in chart and UI understanding errors is the single most significant datum for video. This isn’t about describing an image; it’s about understanding context within a frame. For my product, this directly translates into roadmap features that were previously too unreliable:

- Precision Logging & Analysis: We can now build agents that watch a rough cut and automatically generate a meaningful log: “0:45 – wide shot, three subjects, subject on left looks off-camera.” This moves us from keyword tagging to narrative understanding.

- Intelligent Assistant Integration: An in-app AI assistant can now reliably “see” the user’s timeline, composition panel, or effect controls. A user could ask, “Why does this color grade look flat?” and the AI, by analyzing the UI state and waveform, could respond, “Your mid-tones are compressed. Try increasing the contrast in the Lumetri panel’s mid-tone slider.”

2. Long-Context & Tool Use Enable End-to-End Workflows.

GPT-5.2’s prowess in long-horizon tool use (evidenced by its 98.7% on the Tau²-bench Telecom) is the foundation for “agentic” features. Instead of isolated AI tools (a transcriber, a caption generator), we can design cohesive workflows:

- “Create a Social Recap” Agent: A user could highlight a 30-minute interview. The AI agent could autonomously: (1) generate a transcript, (2) analyze it for key moments, (3) call an internal tool to create subclips, (4) draft a voiceover script for the highlights, and (5) propose a storyboard. This is a sellable, premium feature.
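To make the five steps concrete, here is a minimal sketch of how such an agent could be structured as a sequence of steps over shared state. Every name in it (RecapState, run_recap_agent, the step functions) is hypothetical, and each step is a placeholder where a real product would call a model or an internal editing tool.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class RecapState:
    """Shared state passed through each step of the hypothetical agent."""
    source_clip: str
    transcript: str = ""
    key_moments: list = field(default_factory=list)  # (start_s, end_s) pairs
    subclips: list = field(default_factory=list)
    script: str = ""
    storyboard: list = field(default_factory=list)


def transcribe(state: RecapState) -> RecapState:
    # Placeholder: a real step would call a speech-to-text service.
    state.transcript = f"[transcript of {state.source_clip}]"
    return state


def find_key_moments(state: RecapState) -> RecapState:
    # Placeholder: a real step would ask the model to select moments.
    state.key_moments = [(45.0, 60.0), (610.0, 640.0)]
    return state


def cut_subclips(state: RecapState) -> RecapState:
    # Placeholder for the internal subclip tool mentioned in step (3).
    state.subclips = [
        f"{state.source_clip}#{start:.0f}-{end:.0f}"
        for start, end in state.key_moments
    ]
    return state


def draft_script(state: RecapState) -> RecapState:
    state.script = f"Voiceover covering {len(state.subclips)} highlights."
    return state


def propose_storyboard(state: RecapState) -> RecapState:
    state.storyboard = [
        f"Panel {i + 1}: {clip}" for i, clip in enumerate(state.subclips)
    ]
    return state


RECAP_STEPS: list[Callable[[RecapState], RecapState]] = [
    transcribe, find_key_moments, cut_subclips, draft_script, propose_storyboard,
]


def run_recap_agent(state: RecapState, steps=RECAP_STEPS) -> RecapState:
    """Run each step in order, feeding the updated state forward."""
    for step in steps:
        state = step(state)
    return state
```

Keeping the steps as plain functions over one state object would let us swap a placeholder for a real tool call one step at a time, and test each step in isolation.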

3. Instruction Following & Compact Reasoning Raise the Quality Floor.

Improved instruction following means our prompt engineering for core features (auto-chaptering, summary generation, style-matching) becomes more stable. Outputs will more consistently adhere to brand voice guidelines or requested formats (e.g., “output as a JSON timeline”). The new xhigh reasoning effort level gives us a dial to turn for our most demanding pro users analyzing complex documentaries or multi-camera edits.
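Even with better instruction following, a request like “output as a JSON timeline” is only useful if we verify what comes back. The sketch below assumes a hypothetical timeline format (a JSON array of entries with start, end, and label fields); that schema and the parse_timeline name are illustrative, not anything the API defines.

```python
import json


def parse_timeline(raw: str) -> list:
    """Parse model output and check it against the assumed timeline schema."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc

    if not isinstance(data, list):
        raise ValueError("timeline must be a JSON array")

    for i, entry in enumerate(data):
        if not isinstance(entry, dict):
            raise ValueError(f"entry {i} is not an object")
        start, end, label = entry.get("start"), entry.get("end"), entry.get("label")
        if not isinstance(start, (int, float)) or not isinstance(end, (int, float)):
            raise ValueError(f"entry {i} needs numeric start and end")
        if end <= start:
            raise ValueError(f"entry {i} ends before it starts")
        if not isinstance(label, str) or not label:
            raise ValueError(f"entry {i} needs a non-empty label")
    return data
```

A failed check is a natural trigger for a retry or a fallback, which is also a cheap way to measure how much more stable the new model really is on our own prompts.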

The Real-World Constraints: A PM’s Reality Check

The excitement is tempered by immediate practical considerations that will shape our integration strategy.

1. The Cost Equation Demands Feature Gatekeeping.

At $14 per million output tokens (40% more than GPT-5.1), GPT-5.2 cannot be a default model for all user interactions. Its use must be strategically reserved. My immediate calculus:

- High-Value Features Only: Deep narrative analysis, agentic workflows, and complex visual Q&A become candidates for GPT-5.2, likely behind a “Pro AI” tier or credit system.

- Hybrid Model Approach: We’ll maintain an architecture that routes simple tasks (basic description, tag generation) to gpt-5-mini or a similar cost-optimized model, and reserve GPT-5.2 for where its advanced capabilities are non-negotiable. The 90% discount on cached inputs is critical here for repeat analyses on the same footage.
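The routing and cost reasoning above can be sketched in a few lines. The task names and the pick_model and estimate_cost helpers are hypothetical. The $14 output price and the 90% cached-input discount come from the figures cited above; the input price is left as a parameter because it is not quoted here, and the value used in the usage example is a placeholder, not a published price.

```python
# Hypothetical task labels for features that justify the premium model.
PREMIUM_TASKS = {"narrative_analysis", "agentic_workflow", "visual_qa"}


def pick_model(task: str) -> str:
    """Route premium tasks to GPT-5.2 and everything else to the cheaper model."""
    return "gpt-5.2" if task in PREMIUM_TASKS else "gpt-5-mini"


def estimate_cost(
    input_tokens: int,
    cached_tokens: int,
    output_tokens: int,
    input_price_per_m: float,
    output_price_per_m: float = 14.0,  # USD per million output tokens, as cited
    cached_discount: float = 0.90,     # 90% off cached input tokens, as cited
) -> float:
    """Estimate the USD cost of one request; cached_tokens is part of input_tokens."""
    if cached_tokens > input_tokens:
        raise ValueError("cached_tokens cannot exceed input_tokens")
    uncached = input_tokens - cached_tokens
    input_cost = uncached * input_price_per_m / 1_000_000
    cached_cost = cached_tokens * input_price_per_m * (1 - cached_discount) / 1_000_000
    output_cost = output_tokens * output_price_per_m / 1_000_000
    return input_cost + cached_cost + output_cost


# Usage: re-analyzing footage where 80% of the prompt is already cached.
# input_price_per_m=2.0 is a placeholder value for illustration only.
cost = estimate_cost(100_000, 80_000, 5_000, input_price_per_m=2.0)
```

With these placeholder numbers the output tokens account for more than half of the request cost, which is why capping output length matters as much as caching for premium features.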

2. API Design Shifts Require Engineering Investment.

The strong push toward the new Responses API (which persists reasoning chains across calls more efficiently) and new parameters like verbosity and reasoning.effort necessitate a non-trivial update to our AI integration layer. This isn’t a simple model name swap. The migration guide from gpt-4.1 to gpt-5.2 with reasoning effort set to none is a starting point, but optimizing for the new features requires dedicated sprint cycles.
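As a rough illustration of what the integration layer has to absorb, the sketch below builds a request payload per product feature. It makes no API call. The feature names and the build_request helper are hypothetical, and the nesting of the effort and verbosity fields is my assumption about the request shape, so it should be checked against the official API reference before anyone relies on it.

```python
# Hypothetical mapping from product feature to reasoning effort.
# The effort values are the ones named in this article: none, low, xhigh.
FEATURE_EFFORT = {
    "realtime_assistant": "none",
    "auto_chaptering": "low",
    "documentary_analysis": "xhigh",
}


def build_request(feature: str, prompt: str, verbosity: str = "low") -> dict:
    """Build a request payload (assumed shape) for the given product feature."""
    if feature not in FEATURE_EFFORT:
        raise KeyError(f"unknown feature: {feature}")
    return {
        "model": "gpt-5.2",
        "input": prompt,
        # Assumed nesting for the reasoning.effort parameter.
        "reasoning": {"effort": FEATURE_EFFORT[feature]},
        # Assumed location and value for the verbosity parameter.
        "text": {"verbosity": verbosity},
    }
```

Centralizing these choices in one mapping means a pricing or latency change becomes a configuration edit rather than a hunt through every feature’s prompt code.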

3. The “Visual Model” Claim Needs Rigorous In-House Testing.

While the benchmarks are promising, video is a uniquely messy domain with fast cuts, dynamic scenes, and creative intent. We must immediately begin a validation cycle:

- Test Suite Creation: We will build a test suite of complex frames (busy crowds, nuanced emotions, stylized color grades) and UI states from our own software to benchmark GPT-5.2 against our current model.

- Latency Checks: The none and low reasoning settings will be tested for real-time assistant applications to ensure UI responsiveness isn’t degraded.
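A validation cycle like this can start with a very small harness. The sketch below scores any model behind a plain callable on accuracy and 95th-percentile latency; the Case structure, the evaluate function, and the substring-match scoring rule are all assumptions for illustration, and a real suite would need a stricter grading method.

```python
import math
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class Case:
    """One test item: a frame or UI screenshot, a question, an expected phrase."""
    frame_path: str
    question: str
    expected: str


def evaluate(ask: Callable[[str, str], str], cases: list) -> dict:
    """Score a model wrapper on accuracy and p95 latency over the test cases."""
    if not cases:
        raise ValueError("need at least one test case")
    correct = 0
    latencies = []
    for case in cases:
        started = time.perf_counter()
        answer = ask(case.frame_path, case.question)
        latencies.append(time.perf_counter() - started)
        # Naive scoring: the expected phrase must appear in the answer.
        if case.expected.lower() in answer.lower():
            correct += 1
    latencies.sort()
    # Nearest-rank 95th percentile.
    p95_index = max(0, math.ceil(0.95 * len(latencies)) - 1)
    return {
        "accuracy": correct / len(cases),
        "p95_latency_s": latencies[p95_index],
    }
```

Running the same cases through one wrapper per model and per reasoning setting gives a side-by-side view of quality and responsiveness on our own footage rather than on public benchmarks.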

Competitive Landscape & Positioning

Adopting GPT-5.2 is also a strategic positioning move.

- For Us (AI-Native Editor): It allows us to leapfrog from offering “AI features” to offering “AI-native workflows,” creating a tangible gap between us and simpler, template-based competitors.

- For Challengers: Competitors using older models (GPT-4, or even GPT-5.1) will find their visual analysis and workflow automation noticeably less coherent and reliable. We can market this difference.

- The Open-Source Counter: While open-source vision-language models are advancing, GPT-5.2’s integrated, production-ready tool-calling and long-context reasoning represent a cohesive package that is still hard to replicate in-house for a product team.

The Verdict and Next Steps

GPT-5.2 is a mandatory, but carefully managed, upgrade for a serious AI video editing product. The capabilities it unlocks align directly with high-user-value, high-complexity problems in video editing.

Our immediate action plan:

- Technical Spike: Allocate one sprint for the engineering team to prototype integration with the Responses API and test the core visual reasoning capabilities.

- Feature Re-prioritization: Revisit the Q1 roadmap. A feature like “AI Assistant that sees your timeline” just moved from “research” to “design.”

- Business Model Review: Begin modeling how the new cost structure affects our unit economics for different user tiers and how we might package the advanced capabilities.

The frontier of AI video editing just moved. GPT-5.2 provides the tools to build not just smarter features, but a more intelligent, cohesive, and ultimately more valuable product. The race now is for the best implementation.